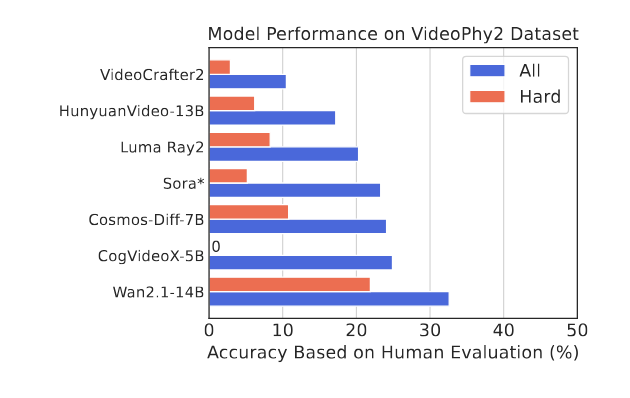

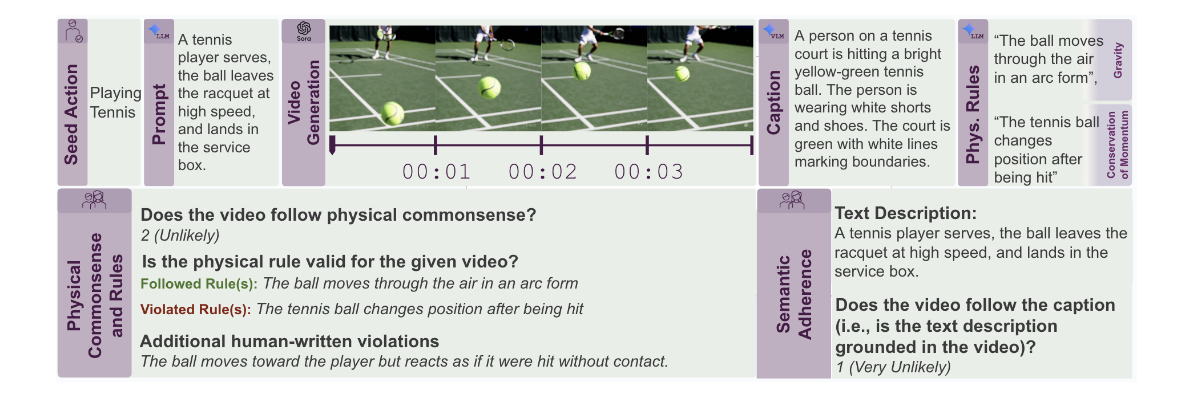

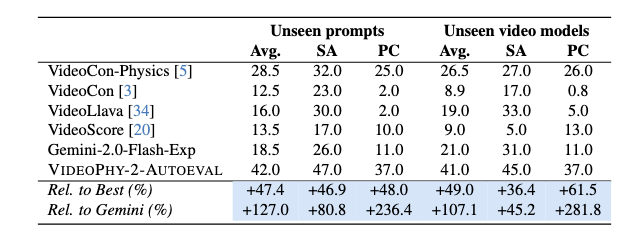

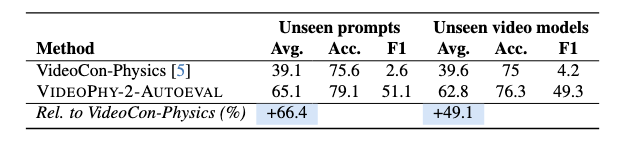

Human evaluation results on VideoPhy. SA denotes semantic adherence and PC denotes physical commonsense. The joint score (SA, PC) is the percentage of instances for which both SA=1 and PC=1.

Open Models

| # | Model | Source | All | Hard | PA | OI |
|---|---|---|---|---|---|---|
| 1 | Wan2.1-14B | Open | 32.6 | 21.9 | 31.5 | 36.2 |
| 2 | CogVideoX-5B | Open | 25.0 | 0.0 | 24.6 | 26.1 |
| 3 | Cosmos-Diff-7B | Open | 24.1 | 10.9 | 22.6 | 27.4 |
| 4 | Hunyuan-13B | Open | 17.2 | 6.2 | 17.6 | 15.9 |
| 5 | VideoCrafter-2 | Open | 10.5 | 2.9 | 10.1 | 13.1 |

Closed Models

| # | Model | Source | All | Hard | PA | OI |
|---|---|---|---|---|---|---|
| 1 | Ray2 | Closed | 20.3 | 8.3 | 21.0 | 18.5 |
| 2 | Sora | Closed | 23.3 | 5.3 | 22.2 | 26.7 |
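The caption defines the joint score as the percentage of instances with both SA=1 and PC=1. As a minimal sketch of that definition, the snippet below computes it from a list of binary ratings. The function name `joint_score` and the toy ratings are hypothetical and only illustrate the arithmetic; they are not the benchmark's evaluation code or data.

```python
def joint_score(ratings):
    """Return the percentage of instances with SA == 1 and PC == 1.

    `ratings` is a list of (sa, pc) pairs, each value 0 or 1.
    This input layout is an assumption made for illustration.
    """
    if not ratings:
        raise ValueError("ratings must be non-empty")
    # Count only the instances where both judgments are positive.
    hits = sum(1 for sa, pc in ratings if sa == 1 and pc == 1)
    return 100.0 * hits / len(ratings)


# Hypothetical toy ratings (SA, PC), not VideoPhy data.
toy_ratings = [(1, 1), (1, 0), (0, 1), (1, 1)]
print(joint_score(toy_ratings))  # 50.0
```

A video that follows the prompt but violates physics (SA=1, PC=0) does not count toward the score, so the joint score can never exceed either individual rate.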

🚨 To submit your results to the leaderboard, please send a CSV file containing the video URLs and captions from the model builders to this email for human/automatic evaluation.
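A submission file of this kind could be assembled as sketched below. The column names `video_url` and `caption`, the helper `build_submission_csv`, and the example row are all assumptions, since the page does not specify an exact schema; check with the maintainers before submitting.

```python
import csv
import io


def build_submission_csv(rows):
    """Serialize (video_url, caption) pairs to CSV text.

    The header names are hypothetical; the leaderboard's required
    schema is not stated on this page.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["video_url", "caption"])
    for url, caption in rows:
        # csv.writer quotes captions that contain commas or quotes.
        writer.writerow([url, caption])
    return buffer.getvalue()


# Hypothetical example row; replace with your model's outputs.
example_rows = [
    ("https://example.com/videos/0001.mp4", "A ball rolls down a ramp, then stops."),
]
print(build_submission_csv(example_rows))
```

Using the `csv` module rather than manual string joins keeps captions with commas or quotation marks intact when the file is read back.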